¶ Text Classification

AI categorizes text content by classifying topics or identifying responsible staff mentioned in the text.

Beta version: The platform is still under development and may be less stable than the final release. Access and performance may be limited. For example, the platform might crash, some features might not work properly, or some data might be lost.

¶ Training model

For “Customized AI” mode, you only need to prepare a dataset if you want to train your own model.

¶ Input

The input data should be a comma-separated values (.csv) file, that is, a table whose columns are separated by commas (“,”). After uploading the dataset, you must select a data type for each column. The possible column types are as follows:

- Sentence: contains a sentence of text.

- Label: contains the corresponding label.

  * This model version supports only one label per sentence.

- Train/Test (optional): identifies the purpose of each row in the training process, which can be “Train” or “Test” (for more detail about splitting the dataset, see the ‘Train/Test split’ section).

  * If no Train/Test column is assigned, ACP automatically splits the dataset 70:30 (Train:Test). You can adjust the ratio under “Train/Test Split” in the “Build new model” process.
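As a rough illustration of the layout described above, the following sketch builds a small CSV with the three column types and fills in a 70:30 Train/Test assignment. The header names, the sample sentences, and the shuffling strategy are assumptions for illustration only; how ACP actually performs its automatic split is not documented here.

```python
import csv
import io
import random


def assign_split(rows, train_ratio=0.7, seed=0):
    """Return copies of rows with a "Train/Test" value on every row.

    Hypothetical helper: rows are shuffled with a fixed seed and the first
    train_ratio share is marked "Train", the rest "Test".
    """
    rng = random.Random(seed)
    order = list(range(len(rows)))
    rng.shuffle(order)
    n_train = round(len(rows) * train_ratio)
    train_ids = set(order[:n_train])
    return [
        {**row, "Train/Test": "Train" if i in train_ids else "Test"}
        for i, row in enumerate(rows)
    ]


def to_csv(rows, fieldnames=("Sentence", "Label", "Train/Test")):
    """Serialize rows to CSV text; the header names are assumed, not prescribed."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Ten made-up rows, one label per sentence.
rows = [
    {"Sentence": f"example sentence {i}", "Label": "Label A" if i % 2 else "Label B"}
    for i in range(10)
]
split_rows = assign_split(rows)
csv_text = to_csv(split_rows)
print(csv_text)
```

Because the csv module quotes fields automatically, sentences that contain commas remain in a single column.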

¶ The quality of data

Since text classification is a statistical machine learning model, a sufficient amount of training data is required to ensure model performance. The quality of the data and the number of samples per class are also important. If the model is trained on a dataset that is too small, it may overfit, which lowers its performance on new data. Likewise, when the number of samples per class is unbalanced, the model tends to predict the minority classes poorly. For example, if a news topic dataset contains 90% political news and 1% science news, the model is unlikely to capture the essence of science news.

¶ How to prepare input Data

- Collect text and label from your existing source.

- Filter out texts that meet any of the following criteria:

  - More than half of the characters are emojis or emoticons.

  - The text contains more than 5 contiguous foreign characters.

  - The text is illegible, including randomly typed words.

- Recheck labels against the following guidelines:

  - Each text has a corresponding label. If not, remove the entire row.

  - Each text has only one label. If not, remove the entire row.

  - The label is related to its text. If not, re-annotate the text.

  - Each label has only one spelling (labels are case-sensitive). If not, re-annotate to make the label consistent.

  - The class with the fewest texts has at least 5% as many texts as the class with the most texts. If not, either remove the small class or return to the first step to collect more data.

- Format the data as required; see the “Input” section.

- Lastly, while more input data generally improves model performance, ACP automates the model-building process and has limited capacity. If your input data is larger than 1 GB, consider contacting us directly for guidance and model training schemes tailored to your data.
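Some of the label checks above can be automated before uploading. The sketch below covers three of them: dropping rows without a label, spotting labels that differ only in spelling case, and flagging classes below the 5% threshold. The function names and sample rows are hypothetical, and the check for whether a label actually matches its text still needs a human reviewer.

```python
from collections import Counter, defaultdict


def drop_unlabeled(rows):
    """Keep only (text, label) pairs where both parts are non-empty."""
    return [
        (text, label)
        for text, label in rows
        if text and text.strip() and label and label.strip()
    ]


def inconsistent_labels(rows):
    """Group labels that differ only by case or surrounding whitespace."""
    variants = defaultdict(set)
    for _, label in rows:
        variants[label.strip().lower()].add(label)
    return {key: sorted(found) for key, found in variants.items() if len(found) > 1}


def underrepresented(rows, min_ratio=0.05):
    """Return classes with fewer than min_ratio times the largest class count."""
    counts = Counter(label for _, label in rows)
    largest = max(counts.values())
    return sorted(label for label, n in counts.items() if n < min_ratio * largest)


# Made-up rows for illustration only.
rows = (
    [("some text", "Political News")] * 100
    + [("some text", "political news")] * 10
    + [("some text", "Science News")] * 4
    + [("some text", "")]
)
labeled = drop_unlabeled(rows)
print(inconsistent_labels(labeled))
print(underrepresented(labeled))
```

In this example the two spellings of the political label would be merged by re-annotation first, after which the class counts should be checked again.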

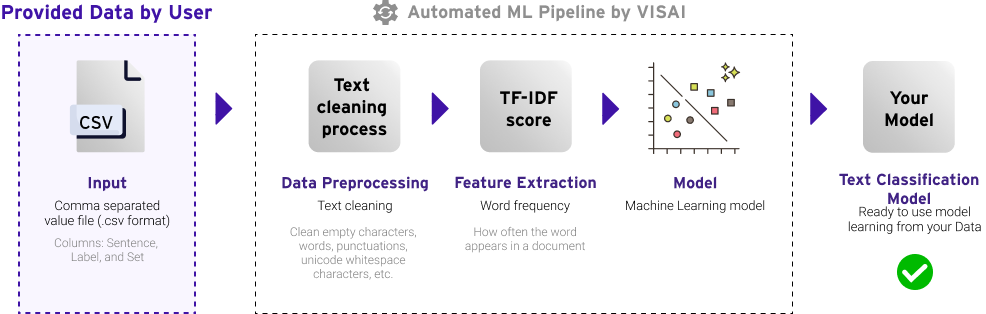

¶ Data preprocessing

Before features are extracted and the vectors are fed to a model, the text is preprocessed according to the following rules:

- Remove HTML tags

- Reorder vowels to correct Thai word misspelling

- Format spaces and new lines appropriately

- Remove brackets

- Format URL links

- Format repeated text, words

- Ungroup all emojis

All of these preprocessing steps use the PyThaiNLP library. We also use newmm, a Thai word tokenizer that is likewise available in PyThaiNLP.
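To give a feel for what several of these rules do, here is a minimal sketch using only Python's standard `re` module. It is not the PyThaiNLP implementation: the regular expressions, the `<url>` placeholder, and the choice to collapse three or more repeated letters into one are all assumptions. Thai vowel reordering, emoji ungrouping, and newmm tokenization are not reproduced.

```python
import re

URL_TOKEN = "<url>"  # hypothetical placeholder for any link


def preprocess(text):
    """Apply a simplified subset of the cleaning rules listed above."""
    text = re.sub(r"<[^>]+>", " ", text)              # remove HTML tags
    text = re.sub(r"https?://\S+", URL_TOKEN, text)   # format URL links
    text = re.sub(r"[\[\](){}]", " ", text)           # remove bracket characters
    text = re.sub(r"([^\W\d])\1{2,}", r"\1", text)    # collapse repeated letters
    text = re.sub(r"\s+", " ", text).strip()          # format spaces and new lines
    return text


print(preprocess("<b>Sooooo   good</b> [ad] http://x.y"))
print(preprocess("<p>ดีมากกกกก   (จริง)</p>\nhttps://example.com/x"))
```

The order matters in this sketch: tags are removed before the URL placeholder is inserted, so the placeholder's angle brackets are not stripped again.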

¶ Feature extraction

In this model pipeline, TF-IDF is used as the feature extractor. The goal of feature extraction is to convert a text sentence into a set of numbers called “features”. To do so, the feature extractor counts how often each word occurs in a sentence and weights each count by how common the word is across the whole dataset, so that words appearing in many sentences receive a lower weight. This reduces the influence of stop words, which are words that appear frequently but convey little information about the sentence, e.g., ‘มี’ or ‘การ’.
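The weighting idea can be sketched with the textbook TF-IDF formula, where a term's weight is its relative frequency in the sentence multiplied by log(N / df), with N the number of sentences and df the number of sentences containing the term. This is an illustrative variant only; the exact formula and smoothing used by the platform are not stated, and the tokenized sentences below are made up.

```python
import math
from collections import Counter


def tf_idf(tokenized_docs):
    """Compute textbook TF-IDF weights for a list of token lists."""
    n_docs = len(tokenized_docs)
    doc_freq = Counter()
    for tokens in tokenized_docs:
        doc_freq.update(set(tokens))  # count each term once per document

    vectors = []
    for tokens in tokenized_docs:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


# Hypothetical, already tokenized sentences.
docs = [
    ["มี", "การ", "เลือกตั้ง"],
    ["มี", "การ", "แข่งขัน", "ฟุตบอล"],
    ["มี", "การ", "ทดลอง"],
]
vectors = tf_idf(docs)
print(vectors[0])
```

Because ‘มี’ and ‘การ’ occur in every sentence, their weight is zero under this formula, while a word that occurs in only one sentence keeps a positive weight.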

¶ Model

The text classification model is a machine learning model that takes TF-IDF features computed from Thai text and predicts the probability of each label. The ACP ML pipeline for text classification automatically chooses suitable settings for processing the TF-IDF data, including the cutoffs for words that appear too frequently or too rarely. Finally, the model is trained on the preprocessed data with the perceptron algorithm, a lightweight and robust machine learning algorithm. As a result, the model is simple and fast while still performing well.
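For readers unfamiliar with the perceptron, the following is a minimal multi-class sketch over sparse feature dictionaries. It is a generic textbook version, not ACP's implementation: the feature values, the number of epochs, and the tie-breaking are assumptions, and it returns a predicted label rather than the per-label probabilities that the platform reports.

```python
from collections import defaultdict


def train_perceptron(samples, labels, epochs=10):
    """Train one weight vector per class with the perceptron update rule.

    samples: list of {feature: value} dicts (for example TF-IDF weights)
    labels:  list of class names, one per sample
    """
    classes = sorted(set(labels))
    weights = {c: defaultdict(float) for c in classes}

    def score(features, c):
        return sum(weights[c][f] * v for f, v in features.items())

    for _ in range(epochs):
        for features, gold in zip(samples, labels):
            guess = max(classes, key=lambda c: score(features, c))
            if guess != gold:
                # Move the gold class toward the sample and the wrong guess away.
                for f, v in features.items():
                    weights[gold][f] += v
                    weights[guess][f] -= v
    return classes, weights


def predict(model, features):
    """Return the class whose weight vector scores the features highest."""
    classes, weights = model
    return max(
        classes,
        key=lambda c: sum(weights[c].get(f, 0.0) * v for f, v in features.items()),
    )


# Made-up feature vectors for two classes.
samples = [
    {"election": 1.0},
    {"vote": 1.0, "election": 0.5},
    {"goal": 1.0},
    {"match": 1.0, "goal": 0.5},
]
labels = ["Political News", "Political News", "Sport News", "Sport News"]
model = train_perceptron(samples, labels)
print(predict(model, {"goal": 1.0}))
```

Weights change only when a training sample is misclassified, which is what keeps the algorithm light.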

¶ Model Evaluation

For text classification, we use accuracy, F1 score, precision, recall, and a confusion matrix to evaluate the model. Generally, higher accuracy, F1 score, precision, and recall indicate better performance.

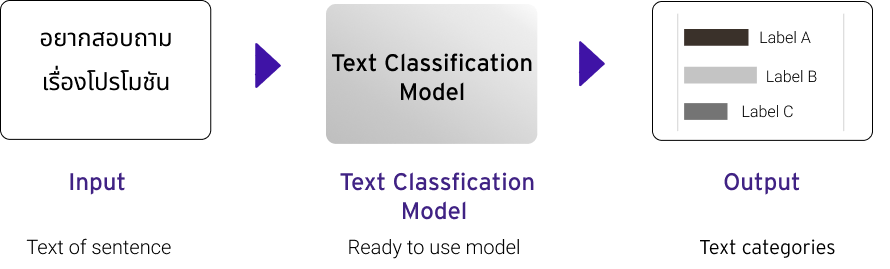
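The metrics named above can be computed from the true and predicted labels of the test rows. The sketch below uses the standard per-class definitions; the label names and predictions are invented, and whether the platform averages the per-class scores (and how) is not specified here.

```python
from collections import Counter


def evaluate(y_true, y_pred):
    """Return accuracy, per-class precision/recall/F1, and confusion counts."""
    labels = sorted(set(y_true) | set(y_pred))
    # confusion[(true_label, predicted_label)] = number of samples
    confusion = Counter(zip(y_true, y_pred))

    report = {}
    for label in labels:
        tp = confusion[(label, label)]
        fp = sum(confusion[(other, label)] for other in labels if other != label)
        fn = sum(confusion[(label, other)] for other in labels if other != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (
            2 * precision * recall / (precision + recall)
            if precision + recall
            else 0.0
        )
        report[label] = {"precision": precision, "recall": recall, "f1": f1}

    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return accuracy, report, confusion


y_true = ["A", "A", "A", "B", "B"]
y_pred = ["A", "A", "B", "B", "B"]
accuracy, report, confusion = evaluate(y_true, y_pred)
print(accuracy)
print(report)
```

On an unbalanced dataset, accuracy alone can look high while a minority class has poor recall, which is why the per-class figures are worth reading alongside it.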

¶ Usage

¶ Input

The text classification model receives a list of sentences as input. The API JSON input format is shown below.

{
  "inputs": [
    "sentence-1",
    "sentence-2",
    "sentence-3",
    ...
  ]
}
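A request body in this format can be produced with Python's `json` module, as in the sketch below. Only the `inputs` key comes from the format above; the helper name and the validation are assumptions, and the endpoint URL and any authentication are not covered because they are not described here.

```python
import json


def build_request_body(sentences):
    """Serialize a list of sentences into the {"inputs": [...]} request body."""
    sentences = list(sentences)
    if not sentences:
        raise ValueError("at least one sentence is required")
    if not all(isinstance(s, str) and s.strip() for s in sentences):
        raise ValueError("every input must be a non-empty string")
    # ensure_ascii=False keeps Thai text readable instead of \u escapes.
    return json.dumps({"inputs": sentences}, ensure_ascii=False)


body = build_request_body(["sentence-1", "sentence-2", "sentence-3"])
print(body)
```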

¶ Output

The API response is a JSON list in which each element is an object containing the input sentence and the predicted probability of each class. The response has the following JSON format:

[
  {
    "text": <sentence-1>,
    "results": {
      "Label A": <prob-labelA-of-sentence-1>,
      "Label B": <prob-labelB-of-sentence-1>,
      "Label C": <prob-labelC-of-sentence-1>,
      ...
    }
  },
  ...
]

As an example, consider a news categorization model. Its API response would be in the following JSON format:

[
  {
    "text": <an unlabeled news article>,
    "results": {
      "Political News": <prob-Political-of-sentence-1>,
      "Sport News": <prob-Sport-of-sentence-1>,
      "Entertainment News": <prob-Entertainment-of-sentence-1>,
      ...
    }
  }
]
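Once a response in this shape has been received, the most likely label for each sentence can be picked out as sketched below. The `text` and `results` keys follow the format above; the sample response text and its probability values are invented for illustration and are not real model output.

```python
import json


def top_predictions(response_text):
    """Return (text, best_label, probability) for each element of the response."""
    predictions = []
    for item in json.loads(response_text):
        label, probability = max(item["results"].items(), key=lambda kv: kv[1])
        predictions.append((item["text"], label, probability))
    return predictions


# Illustrative response only; the numbers are made up.
sample_response = """
[
  {
    "text": "an unlabeled news article",
    "results": {
      "Political News": 0.7,
      "Sport News": 0.2,
      "Entertainment News": 0.1
    }
  }
]
"""
predictions = top_predictions(sample_response)
print(predictions)
```

Keeping the full `results` object is useful when a downstream step needs a confidence threshold rather than only the top label.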