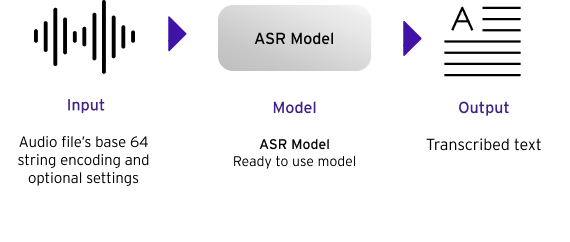

¶ Speech to Text

AI can transcribe speech into text, supporting multiple file types such as mp3, wav, and flac.

Beta version: The platform is in a development stage of the final version, which may be less stable than usual. The efficiency of platform access and usage might be limited. For example, the platform might crash, some features might not work properly, or some data might be lost.

¶ Training model

¶ Model

The Speech to Text, also known as Automatic Speech Recognition (ASR), uses a deep learning model trained on mel-filterbank to enrich both temporal and frequency data. Mel-filterbank is extracted from audio file in a .wav format. The model was optimized by using the CTC Loss to learn the alignments of the probability distribution to the provided annotation.

¶ Model Evaluation

In evaluating a speech-to-text model, we utilize WER (Word Error Rate) and CER (Character Error Rate). Typically, a lower WER and CER indicate superior performance. On the other hand, for assessing a model's performance in speech diarization, we employ DER (Diarization Error Rate). Similar to WER and CER, a lower DER signifies better performance.

¶ Using approach

¶ Input

The model receives mel-filterbank as an input. However, the users are required to send only the audio file (support .wav .mp3 . ogg .flac and etc.); our backend will handle the feature extraction. Note that the input audio should meet the following criteria: mono-channel recording, and sampling rate of 8 kHz or greater. Users can upload multiple audio files to API with optional settings described below.

{

"files_speakers": multi-part form data (Optional)

}¶ Optional settings

- files_speakers

Users can provide speaker files to identify who spoke in each sentence of a given audio recording. A maximum of 5 speaker files can be submitted, with each file not exceeding 20 MB in size. - boosting_words

Users can input up to 10 boosting words to enhance the transcription of a given audio recording.

¶ Output

The output of the model is a list of transcribed text that corresponds to speech detected in each audio file. The API will segment the audio if it has duration longer than 16 seconds or has silence longer than the threshold. The transcribed text is predicted using a specified decoding method. Different decoding method yields different transcription.

Code snippet below shows an example of JSON response obtained when using this API:

{

"results": [

{

"filename": <filename>,

"duration": ":<time (sec)>,

"predictions": [ // list of outputs corresponds to speech detected in audio file

{

"transcript": <sentence>,

"start_time":<time (sec)>,

"end_time":<time (sec)>,

"speaker":< speaker name >, // optional

},

... // continue to next segment results

],

},

... // continue to next file

]

}